Serialization, loading and saving of zfit objects#

The long-term goal is to be able to save and load zfit objects, such as models, spaces, parameters, etc. This is not yet fully implemented, but some parts are already available, some stable, some more experimental.

Overview:

- Binary (pickle) loading and dumping of (frozen) FitResult is fully available
- Human-readable serialization (also summarized under HS3) of
  - parameters and models is available, but not yet stable
  - losses and datasets are not yet available

import os

import pathlib

import pickle

from pprint import pprint

import mplhep

import numpy as np

import zfit

import zfit.z.numpy as znp

from matplotlib import pyplot as plt

from zfit import z

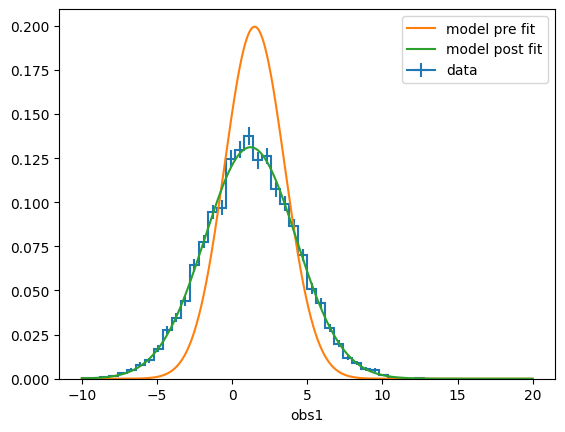

mu = zfit.Parameter("mu", 1.2, -4, 5)

sigma = zfit.Parameter("sigma", 3, 0, 10)

obs = zfit.Space("obs1", -10, 20)

model = zfit.pdf.Gauss(mu=mu, sigma=sigma, obs=obs)

data = model.sample(10000)

loss = zfit.loss.UnbinnedNLL(model=model, data=data)

minimizer = zfit.minimize.Minuit()

x = np.linspace(*obs.v1.limits, 1000)

mu.set_value(1.5)

sigma.set_value(2)

mplhep.histplot(data.to_binned(50), density=True, label="data")

plt.plot(x, model.pdf(x), label="model pre fit")

result = minimizer.minimize(loss)

plt.plot(x, model.pdf(x), label="model post fit")

plt.legend()

<matplotlib.legend.Legend at 0x7f2d6a5d2610>

result.freeze()

dumped_result = pickle.dumps(result)

loaded_result = pickle.loads(dumped_result)

mu.set_value(0.42)

print(f"mu before: {mu.value()}")

zfit.param.set_values(params=model.get_params(), values=loaded_result)

print(f"mu after: {mu.value()}, set to result value: {loaded_result.params[mu]['value']}")

mu before: 0.42

mu after: 1.224895093745138, set to result value: 1.224895093745138
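The round trip above stays in memory. To keep a result between sessions, the same pickle calls can target a file. The following is a minimal sketch that uses a plain dict as a stand-in for the frozen result so that it runs without zfit; the stand-in and the file name `result.pkl` are assumptions, and it is assumed (not verified here) that a frozen `FitResult` can be passed to `pickle.dump` in the same way.

```python
import pickle
import tempfile
from pathlib import Path

# Stand-in for a frozen FitResult (assumption: a plain dict, NOT a zfit object).
# The parameter values are the fitted values shown in this document.
stand_in = {"params": {"mu": 1.224895093745138, "sigma": 3.0411914509561657}}

with tempfile.TemporaryDirectory() as tmpdir:
    path = Path(tmpdir) / "result.pkl"  # hypothetical file name
    # Write the pickled bytes to disk ...
    with path.open("wb") as file:
        pickle.dump(stand_in, file)
    # ... and read them back, e.g. in a later session.
    with path.open("rb") as file:
        restored = pickle.load(file)

print(restored == stand_in)
```

As with any pickle file, it should only be loaded from a trusted source, since unpickling can execute arbitrary code.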

Human-readable serialization (HS3)#

WARNING: this section is unstable. Apart from dumping for publication on an “if it works, great” basis, everything else is recommended for power users only and will surely break in the future.

HS3 is the “hep-statistics-serialization-standard”, which is currently being developed and aims to provide a human-readable serialization format for loading and dumping likelihoods. Neither the standard nor its implementation in zfit is stable, and the zfit implementation currently does not follow the standard strictly, for various reasons.

We can either dump objects in the library directly, or create a complete dump to an HS3-like format.

model.to_dict()

{'type': 'Gauss',

'name': 'Gauss',

'x': {'type': 'Space', 'name': 'obs1', 'min': -10.0, 'max': 20.0},

'mu': {'type': 'Parameter',

'name': 'mu',

'value': 1.224895093745138,

'min': -4.0,

'max': 5.0,

'step_size': 0.01,

'floating': True},

'sigma': {'type': 'Parameter',

'name': 'sigma',

'value': 3.0411914509561657,

'min': 0.0,

'max': 10.0,

'step_size': 0.01,

'floating': True}}

mu.to_dict()

{'type': 'Parameter',

'name': 'mu',

'value': 1.224895093745138,

'min': -4.0,

'max': 5.0,

'step_size': 0.01,

'floating': True}

obs.to_dict()

{'type': 'Space', 'name': 'obs1', 'min': -10.0, 'max': 20.0}

Recreate the object#

We can also recreate the object from the dictionary. As a simple example, let’s do this for the model.

gauss2 = model.from_dict(model.to_dict()) # effectively creates a copy (parameters are shared!)

gauss2

<zfit.<class 'zfit.models.dist_tfp.Gauss'> params=[mu, sigma]

This is a bit of a cheat, since we used the model itself to call from_dict (or, more generally, one of the from_* methods). In general, we currently need to know the class of the object in order to convert it back; this is not the case for the HS3 dumping below.

gauss3 = zfit.pdf.Gauss.from_dict(model.to_dict())
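To illustrate why the class currently has to be known, and how a `type` field can remove that requirement, here is a minimal sketch of a registry that dispatches on `type`. The names `REGISTRY`, `register`, `ToyParameter` and `from_dict_any` are hypothetical and not part of zfit; how zfit resolves types internally may differ.

```python
REGISTRY = {}  # hypothetical: maps a "type" string to a class


def register(cls):
    """Class decorator that records the class under its own name."""
    REGISTRY[cls.__name__] = cls
    return cls


@register
class ToyParameter:
    """Toy stand-in for a serializable object (NOT a zfit class)."""

    def __init__(self, name, value):
        self.name = name
        self.value = value

    def to_dict(self):
        # The "type" field records which class produced the dict.
        return {"type": type(self).__name__, "name": self.name, "value": self.value}

    @classmethod
    def from_dict(cls, dct):
        return cls(name=dct["name"], value=dct["value"])


def from_dict_any(dct):
    """Look up the class from the "type" field, then delegate to it."""
    return REGISTRY[dct["type"]].from_dict(dct)


mu_toy = ToyParameter(name="mu", value=1.2)
restored = from_dict_any(mu_toy.to_dict())
print(type(restored).__name__, restored.name, restored.value)
```

With such a lookup, the caller only needs the dictionary, not the class that created it.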

Dumping and loading#

These representations can be converted to anything JSON- or YAML-like. In fact, the objects already offer some conversion methods out of the box.

sigma.to_json()

'{"type": "Parameter", "name": "sigma", "value": 3.0411914509561657, "min": 0.0, "max": 10.0, "step_size": 0.01, "floating": true}'

sigma.to_yaml()

'\'{"type": "Parameter", "name": "sigma", "value": 3.0411914509561657, "min": 0.0, "max":\n 10.0, "step_size": 0.01, "floating": true}\'\n'
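Since to_json returns an ordinary JSON string, it can be parsed with the standard library. The sketch below uses the string printed above as a literal so that it runs without zfit; it only shows that the parsed result carries the same fields as to_dict, not how zfit restores objects from it.

```python
import json

# JSON string as printed by sigma.to_json() above.
sigma_json = (
    '{"type": "Parameter", "name": "sigma", "value": 3.0411914509561657, '
    '"min": 0.0, "max": 10.0, "step_size": 0.01, "floating": true}'
)

# Parse the string into a plain Python dict.
sigma_dict = json.loads(sigma_json)
print(sigma_dict["name"], sigma_dict["value"])

# JSON true/false are mapped to Python booleans.
print(type(sigma_dict["floating"]).__name__)
```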

Serializing large datasets#

We can also serialize data objects. However, binned data can be large (i.e. in the millions) and are therefore not suitable to be stored in plain text (which typically requires a factor of 10 more space). Therefore, we can use the to_asdf method to store the data in a binary format. This stores any numpy array in binary form and keeps only a reference to it in the text part.

data.to_dict()

{'type': 'Data',

'data': array([[-2.5358869 ],

[-0.54566938],

[-0.92093449],

...,

[-2.67195615],

[ 2.14358726],

[ 2.64176896]]),

'space': [{'type': 'Space', 'name': 'obs1', 'min': -10.0, 'max': 20.0}]}

As we can see, naturally the whole data array is saved. Trying to convert this to JSON or YAML will fail as these dumpers by default cannot handle numpy arrays (one could convert the numpy arrays to lists, but the problem with space will remain).
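The list conversion mentioned in parentheses can be sketched with NumPy and the standard json module alone. The dictionary below is a small hand-written stand-in for the output of data.to_dict() (only three of the values shown above), so the snippet runs without zfit.

```python
import json

import numpy as np

# Stand-in for the dict returned by data.to_dict() (assumption: trimmed to 3 rows).
toy = {
    "type": "Data",
    "data": np.array([[-2.5358869], [-0.54566938], [-0.92093449]]),
    "space": [{"type": "Space", "name": "obs1", "min": -10.0, "max": 20.0}],
}

# The default JSON encoder does not know how to handle numpy arrays.
try:
    json.dumps(toy)
except TypeError as error:
    print(f"json failed: {error}")

# Workaround: convert the array to nested Python lists before dumping.
as_lists = {**toy, "data": toy["data"].tolist()}
text = json.dumps(as_lists)
print(text)
```

The nested lists still store every number as text, so the size concern remains for large datasets.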

try:
    data.to_json()
except TypeError as error:
    print(error)

The object you are trying to serialize contains numpy arrays. This is not supported by json. Please use `to_asdf` (or `to_dict)` instead.

Let’s follow the advice!

data_asdf = data.to_asdf()

data_asdf

<asdf._asdf.AsdfFile at 0x7f2d6828c150>

ASDF format#

The ASDF format stands for Advanced Scientific Data Format. It is a mixture of yaml and a binary format that can store arbitrary data, including numpy arrays, pandas dataframes, astropy tables, etc.

Two attributes are convenient to know:

- tree: returns the dict representation of the data
- write_to(path): writes the data to a file in path

data_asdf.tree

{'type': 'Data',

'data': array([[-2.5358869 ],

[-0.54566938],

[-0.92093449],

...,

[-2.67195615],

[ 2.14358726],

[ 2.64176896]]),

'space': [{'type': 'Space', 'name': 'obs1', 'min': -10.0, 'max': 20.0}]}

data_asdf.write_to("data.asdf") # Will create a file in the current directory

We can inspect the file using the head command to print the first 25 lines (out of several hundred in total). As we can see, the beginning is a YAML representation of the data, while the end is a binary representation of the data (which shows up as unreadable characters). The file as a whole is not human-readable, but it can be loaded by any ASDF library.

!head -25 data.asdf

#ASDF 1.0.0

#ASDF_STANDARD 1.5.0

%YAML 1.1

%TAG ! tag:stsci.edu:asdf/

--- !core/asdf-1.1.0

asdf_library: !core/software-1.0.0 {author: The ASDF Developers, homepage: 'http://github.com/asdf-format/asdf',

name: asdf, version: 3.2.0}

history:

extensions:

- !core/extension_metadata-1.0.0

extension_class: asdf.extension._manifest.ManifestExtension

extension_uri: asdf://asdf-format.org/core/extensions/core-1.5.0

software: !core/software-1.0.0 {name: asdf, version: 3.2.0}

data: !core/ndarray-1.0.0

source: 0

datatype: float64

byteorder: little

shape: [10000, 1]

space:

- {max: 20.0, min: -10.0, name: obs1, type: Space}

type: Data

...

(binary block data follows; not printable)

!wc -l data.asdf # count the lines; most of the file is binary

429 data.asdf
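As a portable alternative to the shell commands, the readable header can be separated from the binary part in Python. This is a minimal sketch on synthetic bytes that only mimic the layout shown above (a YAML document terminated by a line containing `...`, followed by binary data); it is not a complete ASDF file, and `split_header` is a hypothetical helper. It assumes Unix line endings and a UTF-8 header.

```python
# Synthetic bytes mimicking the layout shown above. NOT a valid ASDF file.
fake_file = (
    b"#ASDF 1.0.0\n"
    b"%YAML 1.1\n"
    b"--- !core/asdf-1.1.0\n"
    b"type: Data\n"
    b"...\n"
    b"\xd3BLK\x00\x01\x02\x03"
)

END_OF_YAML = b"\n...\n"  # YAML document end marker on its own line


def split_header(raw):
    """Return (header text, remaining bytes), split at the first YAML end marker."""
    header, marker, rest = raw.partition(END_OF_YAML)
    if not marker:
        raise ValueError("no YAML end marker found")
    return (header + marker).decode("utf-8"), rest


header_text, binary_part = split_header(fake_file)
print(header_text)
print(len(binary_part), "bytes of binary data follow")
```

For a real file, the bytes could be read with `pathlib.Path("data.asdf").read_bytes()` before calling the helper.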

Loading can be done using the asdf library directly too.

import asdf

with asdf.open("data.asdf") as f:
    tree = f.tree
    data = zfit.Data.from_asdf(f)

data.value()

<tf.Tensor: shape=(10000, 1), dtype=float64, numpy=

array([[-2.5358869 ],

[-0.54566938],

[-0.92093449],

...,

[-2.67195615],

[ 2.14358726],

[ 2.64176896]])>

# cleanup of the file

import pathlib

pathlib.Path("data.asdf").unlink()

HS3 serialization#

To convert our objects into an HS3-like format, we can use the following functions. The format is not yet stable and will change in the future.

It is therefore recommended to simply try it out: if it works, great; if it raises an error, so be it. Don’t expect to be able to load the result again in the future, but if it works, it is nice for publication.

Objects#

We can serialize the objects themselves: PDFs, spaces, etc. The difference from the to_dict serialization above is that the HS3 serialization is more verbose and contains more information, such as metadata and fields for other objects (e.g. the parameters of a PDF). It will also fill in some of the fields by extracting the information from the object.

zfit.hs3.dumps(model)

{'metadata': {'HS3': {'version': 'experimental'},

'serializer': {'lib': 'zfit', 'version': '0.20.1'}},

'distributions': {'Gauss': {'type': 'Gauss',

'name': 'Gauss',

'x': {'type': 'Space', 'name': 'obs1', 'min': -10.0, 'max': 20.0},

'mu': 'mu',

'sigma': 'sigma'}},

'variables': {'mu': {'name': 'mu',

'value': 1.224895093745138,

'min': -4.0,

'max': 5.0,

'step_size': 0.01,

'floating': True},

'sigma': {'name': 'sigma',

'value': 3.0411914509561657,

'min': 0.0,

'max': 10.0,

'step_size': 0.01,

'floating': True},

'obs1': {'name': 'obs1', 'min': -10.0, 'max': 20.0}},

'loss': {},

'data': {},

'constraints': {}}

hs3obj = zfit.hs3.loads(zfit.hs3.dumps(model))

list(hs3obj['distributions'].values())

[<zfit.<class 'zfit.models.dist_tfp.Gauss'> params=[mu, sigma]]
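In the dump above, the distribution refers to its parameters by name ('mu': 'mu'), while the parameter details live under 'variables'. The following sketch shows how such references could be followed in plain Python. It works on a trimmed literal copy of the printed output; `resolve_references` is a hypothetical helper and not how zfit.hs3.loads is implemented.

```python
# Trimmed literal copy of the zfit.hs3.dumps(model) output shown above.
hs3_like = {
    "distributions": {
        "Gauss": {
            "type": "Gauss",
            "name": "Gauss",
            "x": {"type": "Space", "name": "obs1", "min": -10.0, "max": 20.0},
            "mu": "mu",
            "sigma": "sigma",
        }
    },
    "variables": {
        "mu": {"name": "mu", "value": 1.224895093745138, "min": -4.0, "max": 5.0},
        "sigma": {"name": "sigma", "value": 3.0411914509561657, "min": 0.0, "max": 10.0},
        "obs1": {"name": "obs1", "min": -10.0, "max": 20.0},
    },
}


def resolve_references(dump):
    """Replace fields that name a variable with that variable's dict."""
    variables = dump["variables"]
    resolved = {}
    for dist_name, dist in dump["distributions"].items():
        entry = {}
        for key, val in dist.items():
            # Assumption: "type" and "name" are labels, never references.
            is_reference = (
                key not in ("type", "name")
                and isinstance(val, str)
                and val in variables
            )
            entry[key] = variables[val] if is_reference else val
        resolved[dist_name] = entry
    return resolved


resolved = resolve_references(hs3_like)
print(resolved["Gauss"]["mu"]["value"])
```

Keeping the parameters in one place and referring to them by name means that two distributions sharing a parameter point to the same entry.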

Publishing#

While the format is constantly being improved, a likelihood created with this format can in principle be published, for example alongside the paper. If we want to omit the data and only publish the model, we can just create an HS3 object with the PDF instead of the likelihood.

hs3dumped = zfit.hs3.dumps(model)

pprint(hs3dumped)

{'constraints': {},
 'data': {},
 'distributions': {'Gauss': {'mu': 'mu',
                             'name': 'Gauss',
                             'sigma': 'sigma',
                             'type': 'Gauss',
                             'x': {'max': 20.0,
                                   'min': -10.0,
                                   'name': 'obs1',
                                   'type': 'Space'}}},
 'loss': {},
 'metadata': {'HS3': {'version': 'experimental'},
              'serializer': {'lib': 'zfit', 'version': '0.20.1'}},
 'variables': {'mu': {'floating': True,
                      'max': 5.0,
                      'min': -4.0,
                      'name': 'mu',
                      'step_size': 0.01,
                      'value': 1.224895093745138},
               'obs1': {'max': 20.0, 'min': -10.0, 'name': 'obs1'},
               'sigma': {'floating': True,
                         'max': 10.0,
                         'min': 0.0,
                         'name': 'sigma',
                         'step_size': 0.01,
                         'value': 3.0411914509561657}}}
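For publication, the model-only dump has to end up in a file. A minimal sketch, assuming the dump contains only JSON-compatible types as in the printed output: the dictionary below is a literal copy of that output so the snippet runs without zfit, and the file name `model_hs3.json` is hypothetical.

```python
import json
import tempfile
from pathlib import Path

# Literal copy of the model-only dump printed above.
hs3_model_dump = {
    "metadata": {
        "HS3": {"version": "experimental"},
        "serializer": {"lib": "zfit", "version": "0.20.1"},
    },
    "distributions": {
        "Gauss": {
            "type": "Gauss",
            "name": "Gauss",
            "x": {"type": "Space", "name": "obs1", "min": -10.0, "max": 20.0},
            "mu": "mu",
            "sigma": "sigma",
        }
    },
    "variables": {
        "mu": {"name": "mu", "value": 1.224895093745138, "min": -4.0,
               "max": 5.0, "step_size": 0.01, "floating": True},
        "sigma": {"name": "sigma", "value": 3.0411914509561657, "min": 0.0,
                  "max": 10.0, "step_size": 0.01, "floating": True},
        "obs1": {"name": "obs1", "min": -10.0, "max": 20.0},
    },
    "loss": {},
    "data": {},
    "constraints": {},
}

with tempfile.TemporaryDirectory() as tmpdir:
    path = Path(tmpdir) / "model_hs3.json"  # hypothetical file name
    # Write a sorted, indented JSON file that is easy to read and to diff.
    path.write_text(json.dumps(hs3_model_dump, indent=2, sort_keys=True))
    # Read it back to check that nothing was lost.
    reloaded = json.loads(path.read_text())

print(reloaded == hs3_model_dump)
```

A dump that includes the data contains numpy arrays, so this plain JSON route would fail for it in the same way as data.to_json().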

hs3dumped = zfit.hs3.dumps(loss)

pprint(hs3dumped)

{'constraints': {},
 'data': {None: {'data': array([[-2.5358869 ],
       [-0.54566938],
       [-0.92093449],
       ...,
       [-2.67195615],
       [ 2.14358726],
       [ 2.64176896]]),
                 'space': [{'max': 20.0,
                            'min': -10.0,
                            'name': 'obs1',
                            'type': 'Space'}],
                 'type': 'Data'}},
 'distributions': {'Gauss': {'mu': 'mu',
                             'name': 'Gauss',
                             'sigma': 'sigma',
                             'type': 'Gauss',
                             'x': {'max': 20.0,
                                   'min': -10.0,
                                   'name': 'obs1',
                                   'type': 'Space'}}},
 'loss': {'UnbinnedNLL': {'constraints': [],
                          'data': [{'data': array([[-2.5358869 ],
       [-0.54566938],
       [-0.92093449],
       ...,
       [-2.67195615],
       [ 2.14358726],
       [ 2.64176896]]),
                                    'space': [{'max': 20.0,
                                               'min': -10.0,
                                               'name': 'obs1',
                                               'type': 'Space'}],
                                    'type': 'Data'}],
                          'model': [{'mu': 'mu',
                                     'name': 'Gauss',
                                     'sigma': 'sigma',
                                     'type': 'Gauss',
                                     'x': {'max': 20.0,
                                           'min': -10.0,
                                           'name': 'obs1',
                                           'type': 'Space'}}],
                          'options': {},
                          'type': 'UnbinnedNLL'}},
 'metadata': {'HS3': {'version': 'experimental'},
              'serializer': {'lib': 'zfit', 'version': '0.20.1'}},
 'variables': {'mu': {'floating': True,
                      'max': 5.0,
                      'min': -4.0,
                      'name': 'mu',
                      'step_size': 0.01,
                      'value': 1.224895093745138},
               'obs1': {'max': 20.0, 'min': -10.0, 'name': 'obs1'},
               'sigma': {'floating': True,
                         'max': 10.0,
                         'min': 0.0,
                         'name': 'sigma',
                         'step_size': 0.01,
                         'value': 3.0411914509561657}}}

hs3dumped

{'metadata': {'HS3': {'version': 'experimental'},

'serializer': {'lib': 'zfit', 'version': '0.20.1'}},

'distributions': {'Gauss': {'type': 'Gauss',

'name': 'Gauss',

'x': {'type': 'Space', 'name': 'obs1', 'min': -10.0, 'max': 20.0},

'mu': 'mu',

'sigma': 'sigma'}},

'variables': {'mu': {'name': 'mu',

'value': 1.224895093745138,

'min': -4.0,

'max': 5.0,

'step_size': 0.01,

'floating': True},

'sigma': {'name': 'sigma',

'value': 3.0411914509561657,

'min': 0.0,

'max': 10.0,

'step_size': 0.01,

'floating': True},

'obs1': {'name': 'obs1', 'min': -10.0, 'max': 20.0}},

'loss': {'UnbinnedNLL': {'type': 'UnbinnedNLL',

'model': [{'type': 'Gauss',

'name': 'Gauss',

'x': {'type': 'Space', 'name': 'obs1', 'min': -10.0, 'max': 20.0},

'mu': 'mu',

'sigma': 'sigma'}],

'data': [{'type': 'Data',

'data': array([[-2.5358869 ],

[-0.54566938],

[-0.92093449],

...,

[-2.67195615],

[ 2.14358726],

[ 2.64176896]]),

'space': [{'type': 'Space', 'name': 'obs1', 'min': -10.0, 'max': 20.0}]}],

'constraints': [],

'options': {}}},

'data': {None: {'type': 'Data',

'data': array([[-2.5358869 ],

[-0.54566938],

[-0.92093449],

...,

[-2.67195615],

[ 2.14358726],

[ 2.64176896]]),

'space': [{'type': 'Space', 'name': 'obs1', 'min': -10.0, 'max': 20.0}]}},

'constraints': {}}

zfit.hs3.loads(hs3dumped)

{'variables': {'mu': <zfit.Parameter 'mu' floating=True value=1.225>,

'sigma': <zfit.Parameter 'sigma' floating=True value=3.041>},

'data': {None: <zfit.Data: Data obs=('obs1',) shape=(10000, 1)>},

'constraints': {},

'distributions': {'Gauss': <zfit.<class 'zfit.models.dist_tfp.Gauss'> params=[mu, sigma]},

'loss': {'UnbinnedNLL': <UnbinnedNLL model=['Gauss'] data=[None] constraints=[] >},

'metadata': {'HS3': {'version': 'experimental'},

'serializer': {'lib': 'zfit', 'version': '0.20.1'}}}